You will be doing the project a big favor if you will invest some time to patiently see what factors cause a jump from 20 ms to 200 ms of latency. It has to be done in steps since your investigations so far indicate that it isn’t DAW related. It could be some combination of interface, routing and particular effects or apps. Knowing which of those are responsible will help the developer find and address the issue.

I understand that the baseline test we did is not your use case. As I explained, we start simple to establish a baseline and then bit by bit bring the configuration closer to your use case to see where the latency comes from. That first test did only one thing: it established the baseline.

Since other people aren’t reporting this kind of latency, the developer can’t know where to start looking without our help. As beta testers, that is how we help the developer make it solid.

Just saying “I get 200 ms of latency with 4 different DAWs” gives the developer no information to act on. Since you now know that you have 20 ms of latency in a simple setup, you can help figure out what causes the jump and provide that information so that Pascal can address the issue.

You ask “why is it happening?”

We won’t know without applying some creativity to figuring out what changes result in your going from less than 20 ms of latency to 200 ms of latency.

It will require some patience and creativity to figure it out.

It could be some combination of interface, routing, the particular effects – but some time needs to be spent to figure out which of those factors are involved.

As I explained, I AM GETTING ON THE ORDER OF 25 MS ROUNDTRIP LATENCY sending my guitar signal in realtime through the iPad.

Why are you getting radically different results? You need to investigate that. The information will no doubt be valuable. I don’t doubt that you are having terrible performance, but let’s figure out why.

The baseline test is just there to show that the simple round-trip doesn’t have 200 ms latency.

There will be several steps to figuring it out.

NEXT STEP: realtime input with same setup

The next one is to use the built-in mic on your audio track and record, in realtime, both the direct signal on the track where you currently have your recording and the effected signal on another track. When I did this I had the metronome turned on.

My results were the same. So, realtime roundtrip with no effects in the way on the iPad was the same as using a recorded source.

The usefulness of this as a test is that it shows there isn’t an inherent difference between realtime input from a mic and using recorded material. This is good to know because it will make testing down the line simpler.
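If you would rather get a number than eyeball the two waveforms, here is a rough sketch of one way to measure the offset between the direct track and the effected track. It assumes you have exported both tracks and loaded them as plain lists of sample values at the same sample rate; the function name `estimate_lag_ms` and the 300 ms search window are my own choices for illustration, not anything from StudioMux or a DAW. It just slides one track against the other and picks the delay where they line up best (a plain, unnormalized cross-correlation), so it works best with a sharp sound like a click or a clap.

```python
def estimate_lag_ms(direct, effected, sample_rate, max_lag_ms=300.0):
    """Estimate how many ms `effected` trails `direct`.

    Tries every delay from 0 up to max_lag_ms and returns the one
    where the two signals have the highest cross-correlation.
    Hypothetical helper for illustration only.
    """
    max_lag = int(sample_rate * max_lag_ms / 1000.0)
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        # Number of samples that overlap at this delay.
        n = min(len(direct), len(effected) - lag)
        if n <= 0:
            break
        score = sum(direct[i] * effected[i + lag] for i in range(n))
        if score > best_score:
            best_lag, best_score = lag, score
    return 1000.0 * best_lag / sample_rate


if __name__ == "__main__":
    # Synthetic check: a single click, delayed by 160 samples at 8 kHz.
    rate = 8000
    direct = [0.0] * 1000
    effected = [0.0] * 1000
    direct[100] = 1.0
    effected[100 + 160] = 1.0
    print(estimate_lag_ms(direct, effected, rate))
```

Running the same measurement after each step means we compare figures taken the same way every time, which is what the developer needs.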

STEP AFTER THAT:

Use your audio interface with the same buffer size settings as used for the built-in driver. For the test, if you have an interface that can be connected to multiple devices, have it connected to just the Mac.

Now repeat those first two tests and see what the latency is.

This is to determine to what extent the driver of the interface is involved.

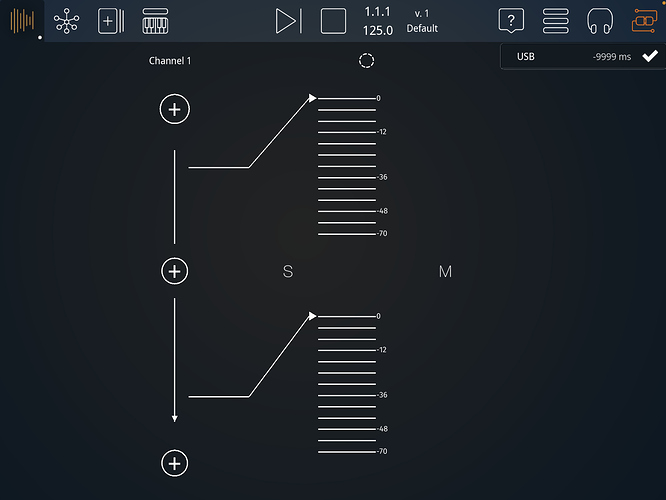

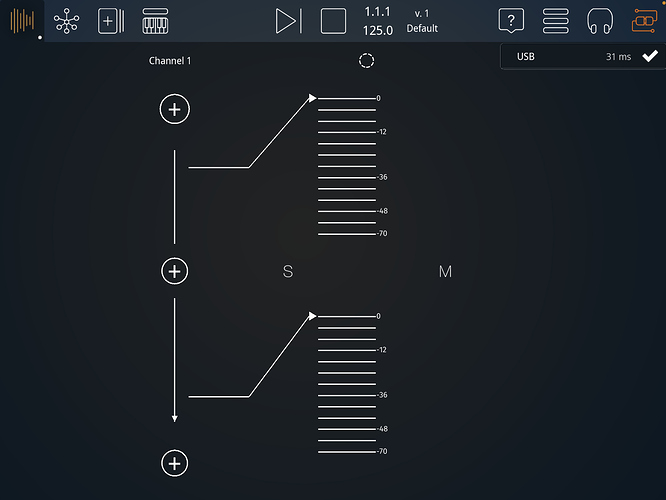

P.S. This morning I am getting closer to 35 ms roundtrip latency with the same set-up. I also inserted THU and ToneBoosters EQ as effects in the StudioMux host on the iPad, with no difference overall (using a buffer size of 128 on the iPad).

I did encounter one instance where SMUX seems not to have been able to keep up and there was a crackle after which the latency was about doubled. I rebooted both devices and haven’t been able to reproduce that yet.
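For a sense of scale on the buffer size mentioned above, here is the back-of-envelope arithmetic. I am assuming the 128 is a count of sample frames and that the sample rate is 44.1 kHz or 48 kHz; check your own settings, since neither figure is confirmed here. The function name is just for illustration.

```python
def buffer_latency_ms(buffer_frames, sample_rate_hz):
    """Time (ms) that one buffer of audio represents.

    Assumes buffer_frames is a count of sample frames.
    """
    return 1000.0 * buffer_frames / sample_rate_hz


if __name__ == "__main__":
    for rate in (44100, 48000):  # assumed sample rates
        ms = buffer_latency_ms(128, rate)
        print(f"128 frames at {rate} Hz is about {ms:.1f} ms per buffer")
```

Under those assumptions a single buffer is only around 3 ms, so a 200 ms roundtrip can’t be explained by one buffer of that size. Something else in the chain has to be adding it, which is what the step-by-step tests are meant to find.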